Disclaimer: Views in this blog do not promote, and are not directly connected to any L&G product or service. Views are from a range of L&G investment professionals, may be specific to an author’s particular investment region or desk, and do not necessarily reflect the views of L&G. For investment professionals only.

Engines of intelligence: Building silicon cathedrals

Physical infrastructure is driving the AI buildout. That is having macro implications, for better and worse.

Economists are often accused of being a grim lot. Since the time of Thomas Malthus, practitioners of the “dismal science” have been charged with prophesying doom. But while dangers (demographic decline, geopolitical risks) are clearly observable, we are often surprised by unforeseen positive trends.

So with AI. Formal research into AI was once treated with derision. The New York Times once incredulously reported that Ilya Sutskever was paid $1m annually at a little-known non-profit called OpenAI[1]. The Guardian once quoted speculation that AI’s usefulness was becoming “sensationalised”. Although economists can be accused of discounting AI, it’s clear we weren’t alone.

Seven years later, AI is the central investment theme in equities, with excitement around model progress, profitability and productivity driving exuberance across sectors and markets.

We have previously written a series on the impact of AI. In this new series, we examine the effects of the AI infrastructure buildout, the technology’s geopolitical ramifications, progress in the models and how markets have reacted.

From the ground up

The generative AI embedded in apps like ChatGPT runs on advanced chips needed to produce text, video and sound. Recent models can ‘reason’ (with impressive results) by using chips more intensively to refine answers. Ever-growing user numbers are also putting strain on chip infrastructure.

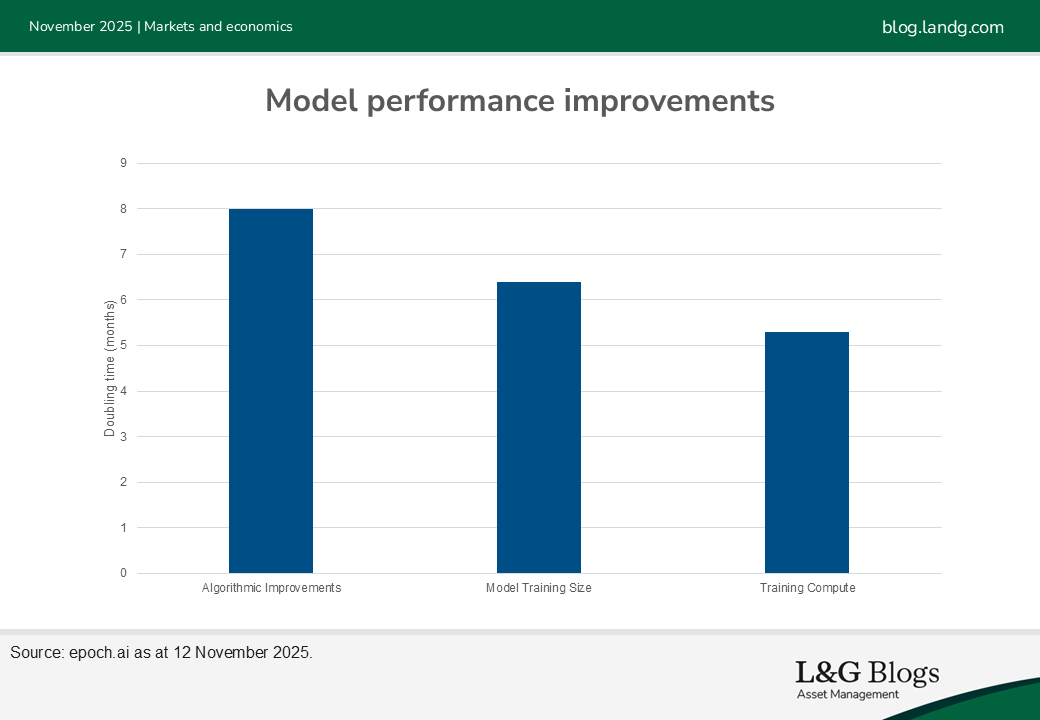

Simply put, the world needs lots of AI chips, both to improve AI capabilities and to serve the technology’s growing userbase. Epoch.ai, a data provider, finds that demand for computation to train AI models is doubling roughly every 5 months. That is outpacing the substantial gains from algorithmic efficiency and data volumes, which are doubling effective AI-related computation every 8 months and 6.5 months, respectively. The rapid rise of chip stocks has reflected this need, with America’s five largest technology companies proposing to invest over $450bn between them in 2027.
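To put those doubling times on a common footing, the short Python sketch below converts each one into an approximate growth multiple per year. It assumes smooth exponential growth, which is a simplification, and the function name and labels are ours rather than Epoch.ai’s.

```python
def annual_growth_factor(doubling_months: float) -> float:
    """Growth multiple over 12 months for a quantity that doubles
    every `doubling_months` months (assumes smooth exponential growth)."""
    return 2 ** (12 / doubling_months)


# Doubling times quoted in the text; the labels are illustrative.
doubling_times = {
    "training compute demand": 5.0,
    "algorithmic efficiency": 8.0,
    "data volumes": 6.5,
}

for label, months in doubling_times.items():
    factor = annual_growth_factor(months)
    print(f"{label}: doubles every {months} months, about {factor:.1f}x per year")
```

On these assumptions, a 5-month doubling time compounds to roughly 5.3x a year, against roughly 2.8x and 3.6x for the 8-month and 6.5-month doubling times.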

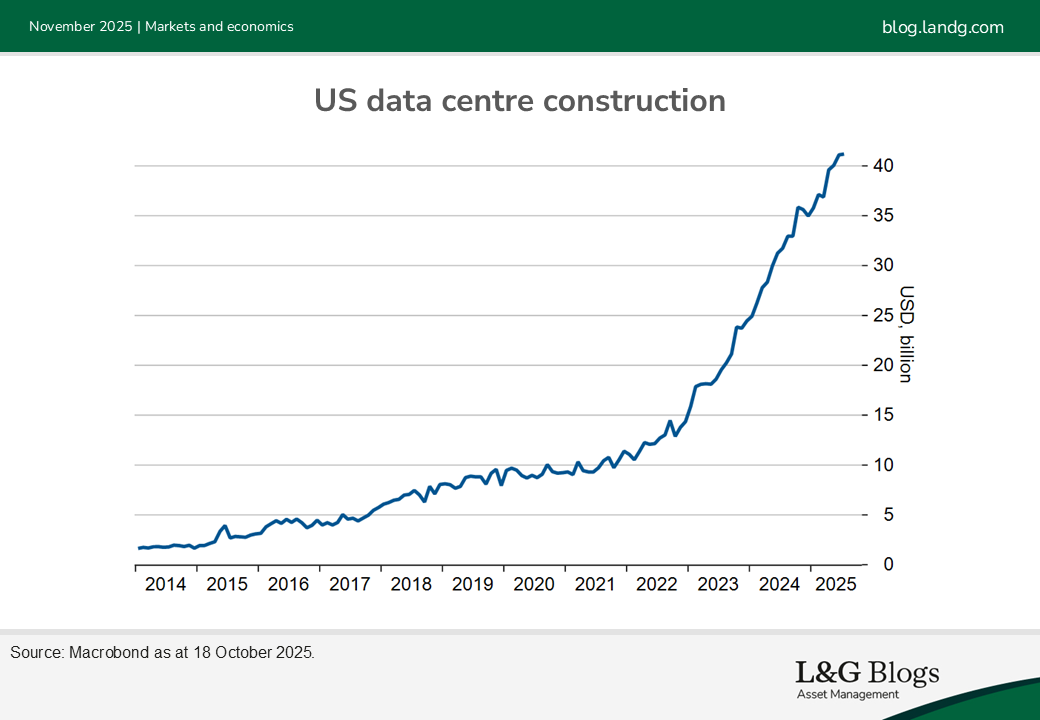

The insatiable demand for chips has correspondingly generated a boom in datacenter construction. America’s datacenter investment has tripled since end-2022, and a report by the consultancy McKinsey expects global datacenter capacity to rise 3.5-fold by 2030. The race in AI-enabled technology is being led by the biggest names in global tech, which are pouring in vast sums to best their rivals. The report finds that construction accounts for only about 10% of total datacenter costs, the rest being chips (~60%) and energy (~30%). Accordingly, if we scale reported datacenter construction investment by a factor of 10, we get total AI capex of around $400bn.
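The scaling step can be sketched in a few lines of Python. The cost shares are the approximate figures cited from the report; the $40bn construction input is not a reported number but a hypothetical value back-solved from the $400bn total, used here purely for illustration.

```python
# Approximate cost shares cited in the text (construction ~10%, chips ~60%, energy ~30%).
COST_SHARES = {"construction": 0.10, "chips": 0.60, "energy": 0.30}


def implied_capex(construction_bn: float, shares: dict = COST_SHARES) -> dict:
    """Scale construction spending up to total capex using the cost shares,
    then split the total back out by component (all figures in $bn)."""
    total = construction_bn / shares["construction"]
    breakdown = {name: total * share for name, share in shares.items()}
    breakdown["total"] = total
    return breakdown


# Hypothetical input: $40bn is back-solved from the ~$400bn total, not a reported figure.
for name, value in implied_capex(40.0).items():
    print(f"{name}: ~${value:.0f}bn")
```

The estimate is only as good as the 10% share: if construction were, say, 15% of total cost, the same construction spending would imply a materially smaller total.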

Growing pains

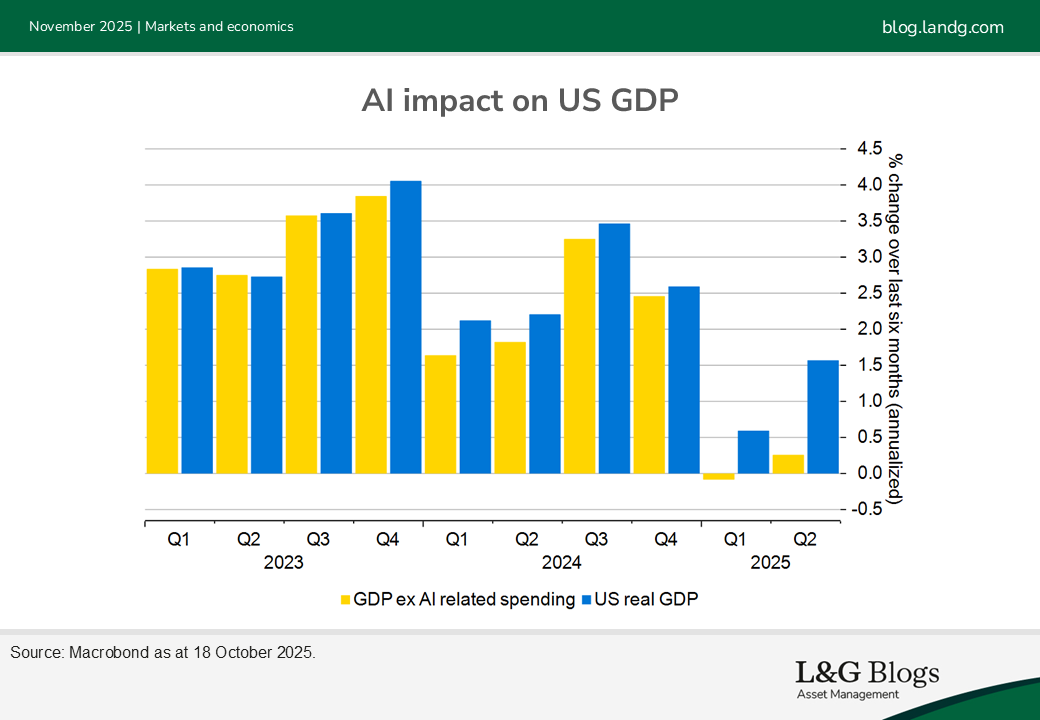

That scale of investment has macroeconomic implications. Counting recent growth in computer software and hardware as AI-related and adding datacenter construction, AI spending contributed 1ppt to US growth in the first half of the year, preventing a GDP contraction. According to economist Paul Kedrosky, the effects of datacenter construction are comparable to those of the telecom boom in the early 2000s, though like-for-like comparison is tricky as many of the chips are made abroad.

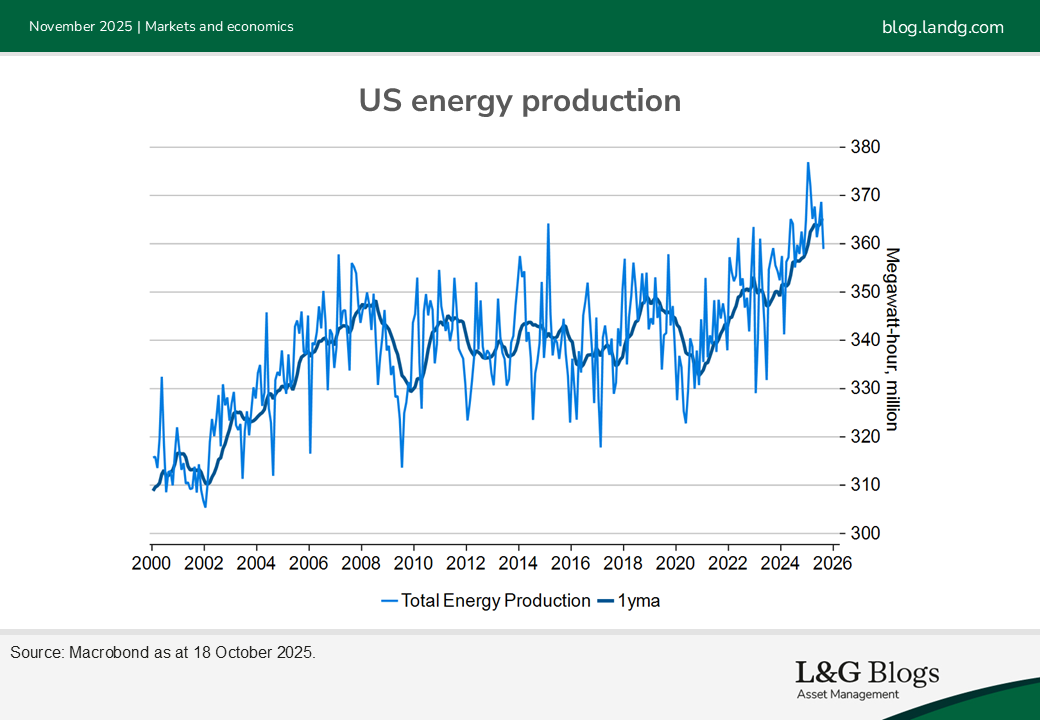

Yet AI expansion is also having perverse effects. Electricity is sought after like never before, as the datacenter rollout stretches the world’s power grids. AI-related electricity demand is expected to reach 50GW by 2030, implying US power production must grow at its fastest rate since the early 1990s. Although new energy deals have been announced in solar, geothermal, nuclear fission and even nuclear fusion, power prices have jumped.

Questions to answer…

But the buildout is also dogged by anxieties. Is the spending on these chips reaching diminishing returns? Some believe model releases from the labs have been disappointing. Others think the stock of data used to train the models is running out, stunting AI improvements. Still others argue that the method by which existing AIs are constructed is flawed, limiting how general their usage can be.

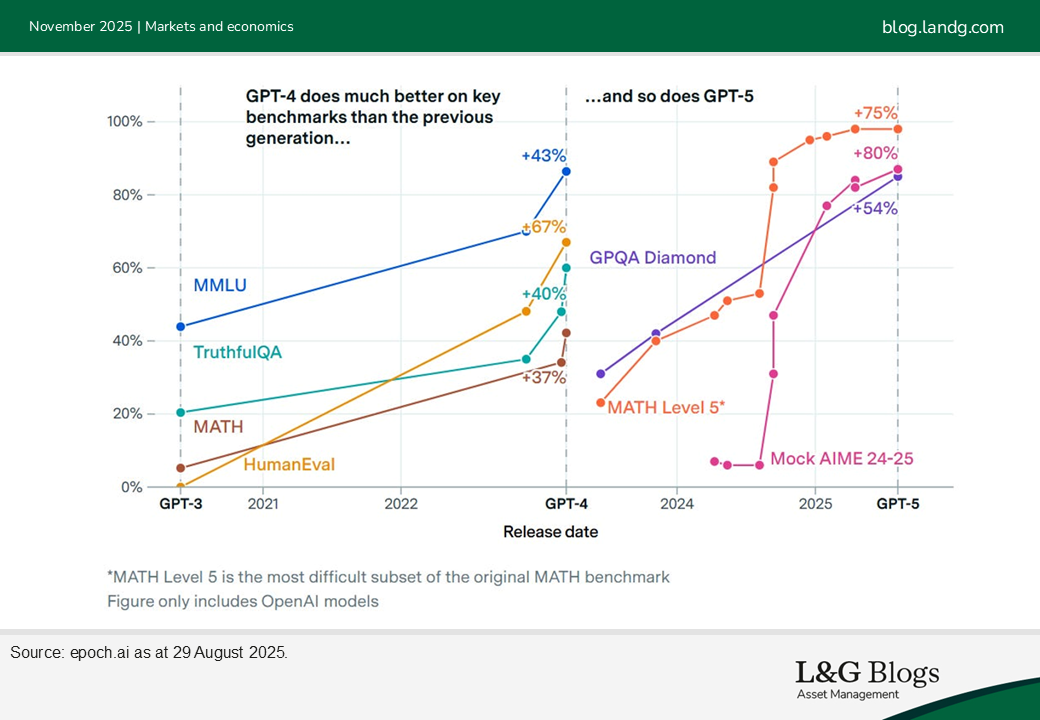

AI believers have answers to all of these anxieties. Doubts around scaling have been raised before, and have so far proved baseless. Claims of model slowdowns are likely also spurious – GPT-3, GPT-4 and GPT-5 all saw similar improvements over similar intervals. The data wall only covers public data, and many AI firms can unearth more, either internally or from external providers.

While the debates over how fruitful all this spending will prove are set to rage on, the AI boom is a reminder for all of us that some surprises can be positive after all.

This is the first in a series of blog posts covering the AI buildout; future instalments include a review of AI’s geopolitical impacts, an overview of AI model performance and a comparison of the current equity mood with the exuberance of the late 1990s.

[1] For illustrative purposes only. Reference to a particular security is on a historic basis and does not mean that the security is currently held or will be held within an L&G portfolio. The above information does not constitute a recommendation to buy or sell any security.
