Disclaimer: Views in this blog do not promote, and are not directly connected to any L&G product or service. Views are from a range of L&G investment professionals, may be specific to an author’s particular investment region or desk, and do not necessarily reflect the views of L&G. For investment professionals only.

Generative AI in data centres part 2: The evolution of GenAI and data centre investment implications

In the second instalment of our latest digital infrastructure blog series, we explore the evolution of GenAI and its possible impact on demand for data centre assets.

Matching the fast-changing needs of artificial intelligence (AI) with data centres that take years to build creates challenges and risks, making asset selection ever more important. In part 1 of this blog series, we considered physical infrastructure needs for AI. Here, part 2 explores GenAI’s evolution and its potential impact on future data centre demand.

A question of power

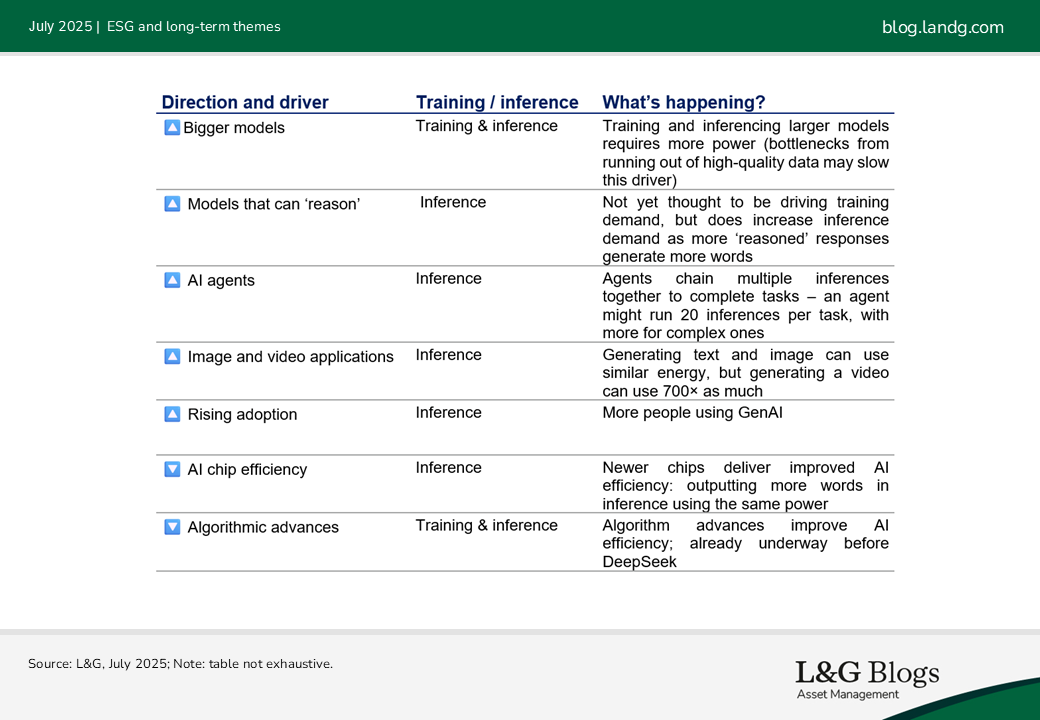

GenAI has seen upward drivers that increase data centre power demand, and downward drivers that reduce it. Despite rapid innovation creating uncertainty in forecasts, indicators suggest we should see continued growth in the medium term.[1]

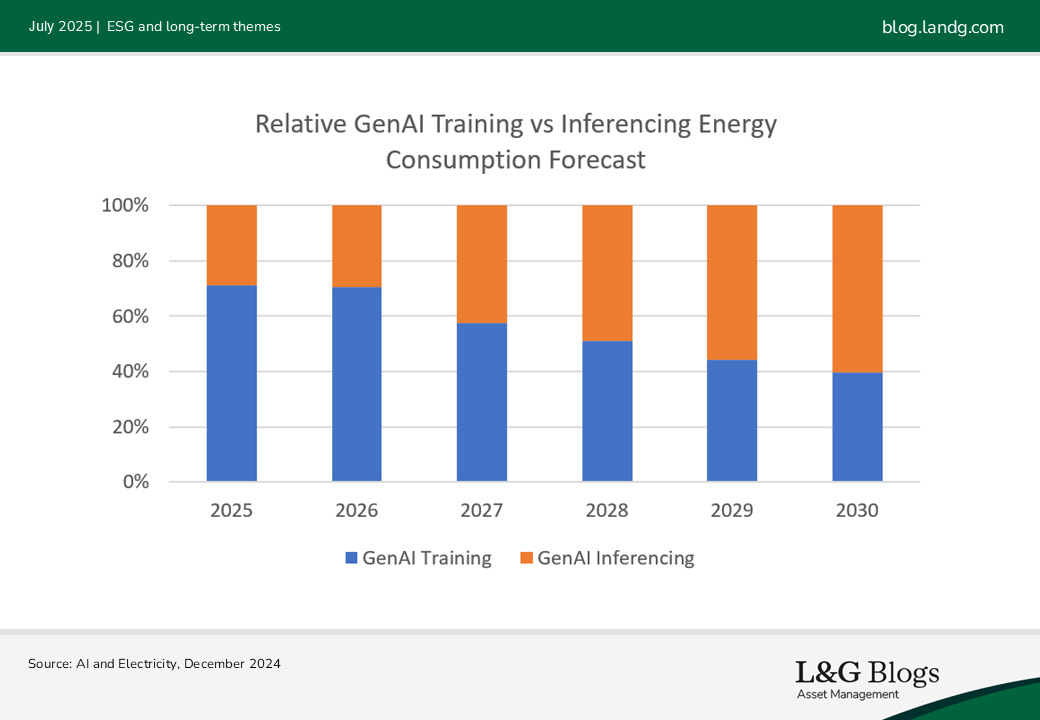

These drivers affect training (building/creating GenAI models like ChatGPT) and inferencing (using/prompting ChatGPT) differently, which in turn shapes the investment strategy for the supporting infrastructure.

Data centres built for AI inference could see increasing demand

AI power demand could shift from training/building GenAI models towards inferencing/using them because:

i) Training ever-larger AI models may no longer boost performance as we’re running out of high-quality data to train them with[3]

ii) Reasoning models now improve performance by using more inference: they are given more ‘thinking time’ when being used

iii) GenAI adoption is rising[2]

iv) Agents can do longer tasks, enabling more applications[3]

v) The additional reinforcement learning step used to train reasoning models could now also incorporate inferencing[4],[5]

We anticipate that these trends will support investment returns in data centres built for AI inference.

As well as seeing relatively more demand, data centres built for inference may have more resilience against obsolescence risk – the risk of assets losing value faster or earlier due to rapid technological change. They require less power than those for training but may need lower latency, i.e., the time taken for data to travel between the data centre and end users needs to be shorter. This translates into a need for greater fibre connectivity and proximity to businesses and population centres. These locational attributes make AI inference facilities easier to adapt to serve cloud or enterprise workloads.

As AI agents take on more tasks of rising complexity, not all will require low latency, allowing some inference workloads to shift to more remote locations over time (given sufficient connectivity). This trend would be more feasible for hyperscalers, in our view.

For enterprise tenants running their own AI chips and leasing space in colocation facilities, we believe physical access, and so locational proximity, remains important.

Despite the possible shift away from AI training, we still expect strong demand for training infrastructure in the medium term, signalled by plans to build GW-scale data centres, potentially to meet future training needs.

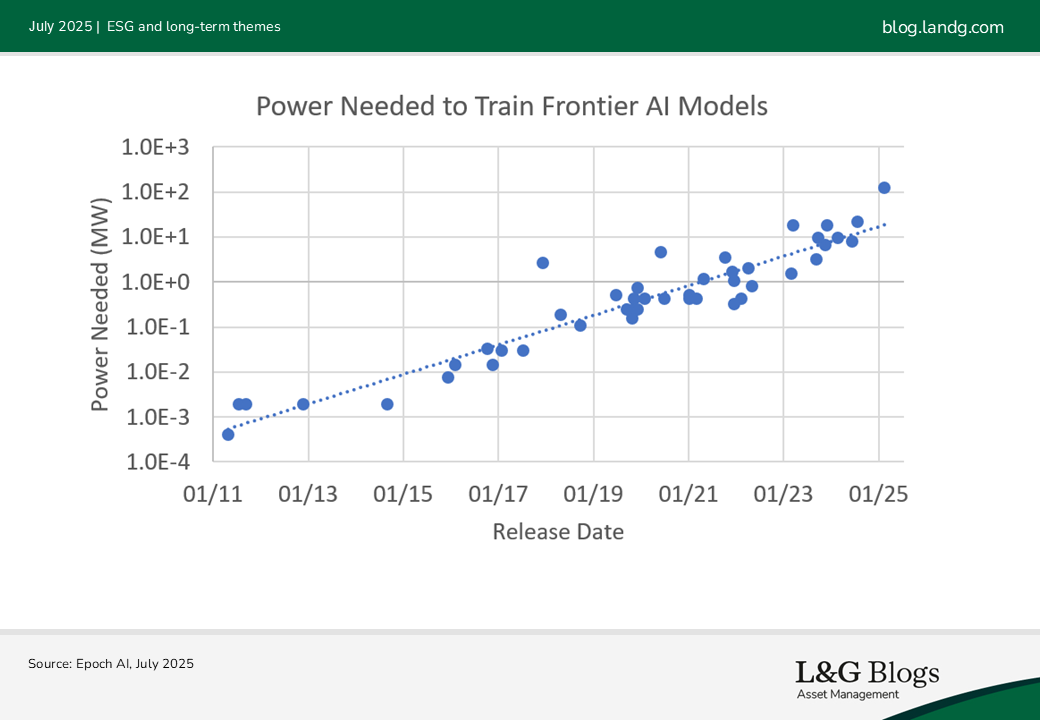

While the power used in training leading AI models doubles every year, data centres have multi-year construction times. This mismatch suggests a higher obsolescence risk for data centres built for AI training.
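To make the mismatch concrete, here is a minimal sketch assuming that frontier training power keeps doubling every year, as stated above, and using hypothetical build times of two to four years (these durations are illustrative assumptions, not figures from this blog). It computes how much the frontier requirement grows while a facility is under construction.

```python
def frontier_power_multiple(years: float, doubling_period_years: float = 1.0) -> float:
    """Growth factor in frontier training power over `years`, assuming steady doubling."""
    return 2 ** (years / doubling_period_years)


# Hypothetical build durations in years (illustrative assumptions only).
for build_years in (2, 3, 4):
    multiple = frontier_power_multiple(build_years)
    print(f"{build_years}-year build: frontier training power grows ~{multiple:.0f}x")
```

Under these assumptions, a facility sized for today's leading training run would, after a three-year build, offer only about one-eighth of the power needed for the frontier run of that time.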

GW-scale data centres – very large facilities that can use all the power generated from a nuclear power plant – are emerging despite this risk. Once built, they could train the best-performing GenAI models and take significant market share of AI training.

AI training facilities require a lot of power but not low latency, enabling remote builds where electricity is cheaper. However, limited fibre connectivity in these areas reduces their suitability for other cloud or enterprise uses, raising additional obsolescence risks.

Although GW-scale centres also face these obsolescence risks, the near-insurmountable barriers to building facilities with even more power give them greater resilience.

While data centres constructed with 100 MW-scale power (one-tenth of GW-scale) and designed for AI training could currently secure hyperscale leases, they may lose AI training market share once the larger GW-scale facilities launch, and so may face greater obsolescence risk. However, if AI agents can tolerate higher latencies, inference could happen in more remote locations, providing alternative uses for more distant sub-GW AI data centres. Overall, their long-term re-leasing prospects are less clear.

New entrants provide a more diversified tenant base and bring higher risk premia

GenAI is diversifying the data centre tenant base, driven by increasing AI demand. A key newcomer is the AI neocloud: firms like CoreWeave that lease power, deploy AI chips, and offer access to their AI clusters like a cloud service. As they partner more closely with major GenAI players, their influence on AI data centre leasing is growing.

Proprietary GenAI models, known as ‘closed-source’ models, usually run in data centres owned by Big Tech hyperscalers. In contrast, ‘open-source’ models can be run by smaller companies in colocation spaces. As open-source models get closer in performance to the proprietary ones, more AI demand could shift from Big Tech firms towards these smaller players.

While these trends diversify the tenant base for colocation operators, the new tenants may bring weaker credit profiles and shorter contracts while still leasing a lot of power, reducing cash flow stability and increasing the risk premia compared to hyperscaler deals. For investors with the right risk appetite and capability, however, we see this as an opportunity to move up the risk spectrum.

These open models are also an enabler of AI sovereignty. In part 3, we consider how AI sovereignty could shape investment opportunities, particularly in Europe.

[1] Situational Awareness, June 2024

[2] Trends – Artificial Intelligence, Mary Meeker, Jay Simons, Daegwon Chae, Alexander Krey, May 2025

[3] Measuring AI Ability to Complete Long Tasks, March 2025

[4] SemiAnalysis, Scaling Reinforcement Learning, June 2025

[5] Reinforcement learning could use more synthetic data in training, which is created using inferencing, so inferencing could be used more in training
