Disclaimer: Views in this blog do not promote, and are not directly connected to any L&G product or service. Views are from a range of L&G investment professionals, may be specific to an author’s particular investment region or desk, and do not necessarily reflect the views of L&G. For investment professionals only.

Generative AI in data centres part 1: A primer

In this first part of a three-part series, we look at how GenAI is fuelling a surge in data centre investment, enabling the development of facilities that can house and cool high-power AI chips.

Data centres play a key role in powering generative AI (GenAI) tools such as ChatGPT and the large language models (LLMs) behind them. GenAI relies on two key processes: ‘training’ and ‘inferencing’. First, during training, the LLM learns by analysing huge amounts of text and data. Then, when a user types in a question or prompt, the LLM responds using what it has learned; this is the inferencing process. Data centres support both processes by providing the electricity, cooling and space needed to run the powerful AI chips that handle them.

Data centre construction costs scale with power capacity, at roughly $12 million per megawatt (MW).[1] As AI power demands grow, so do capital requirements, creating, in our view, an investment opportunity with increasing barriers to entry.
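To make this scaling concrete, the sketch below applies the ~$12 million per MW figure to a few facility sizes. It is illustrative only: it assumes cost scales linearly with power capacity at a flat rate, whereas real costs vary by market, specification and fit-out. The function name `construction_capex_usd` is hypothetical.

```python
# Illustrative sketch only: assumes construction cost scales linearly with
# power capacity at a flat ~$12 million per MW (the Green Street estimate
# cited above). Real costs vary by location, specification and fit-out.

COST_PER_MW_USD = 12_000_000  # assumed flat rate, USD per megawatt


def construction_capex_usd(capacity_mw: float, cost_per_mw: float = COST_PER_MW_USD) -> float:
    """Return the estimated construction cost (USD) for a facility of capacity_mw."""
    if capacity_mw < 0:
        raise ValueError("capacity_mw must be non-negative")
    return capacity_mw * cost_per_mw


if __name__ == "__main__":
    # 100 MW and 1,000 MW (1 GW) bracket the training-cluster range cited later in this article.
    for mw in (10, 100, 1_000):
        print(f"{mw:>5} MW -> ${construction_capex_usd(mw) / 1e9:.2f}bn")
```

Under these assumptions, a 100 MW facility implies roughly $1.2bn of construction capex and a 1 GW facility roughly $12bn, which illustrates how rising power requirements push up the capital needed to compete.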

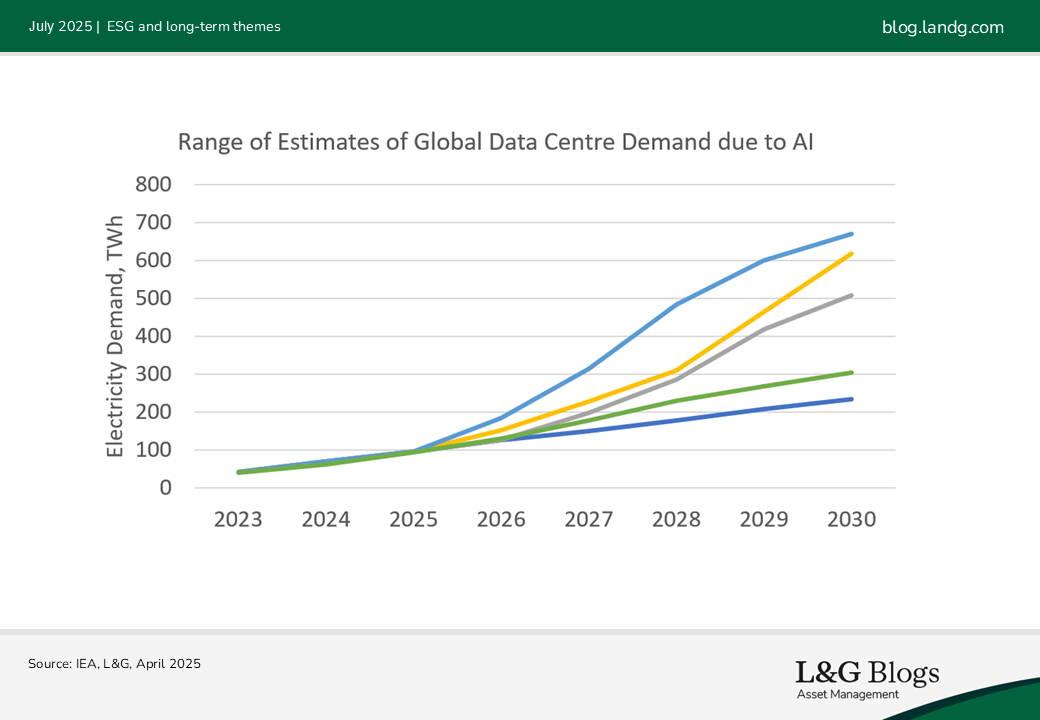

Three main factors are driving demand: cloud growth, data sovereignty and AI. Outsourcing IT infrastructure and processes to cloud services has enabled strong cloud growth, which could continue to 2030[2]. Data sovereignty is expected to drive demand in Europe as nations seek local data processing and storage. GenAI adds a newer layer of global demand, and emerging AI sovereignty could, in our view, similarly direct this towards Europe.

As of end-2024, AI was estimated to account for approximately 10% of data centre capacity[3]. While this share is expected to grow, the extent remains uncertain due to factors such as long-term adoption rates and further efficiency innovations like those demonstrated by DeepSeek. Therefore, investors may, in our opinion, wish to balance exposure: allocating a proportion of data centre capacity to AI while maintaining a diverse tenant base, including cloud-based businesses, to support stable returns.

Data centre investment entry points and AI readiness

Investing in data centres that support advanced AI chips, whether stabilised assets, new developments, or platforms containing both, offers exposure to GenAI-driven growth.

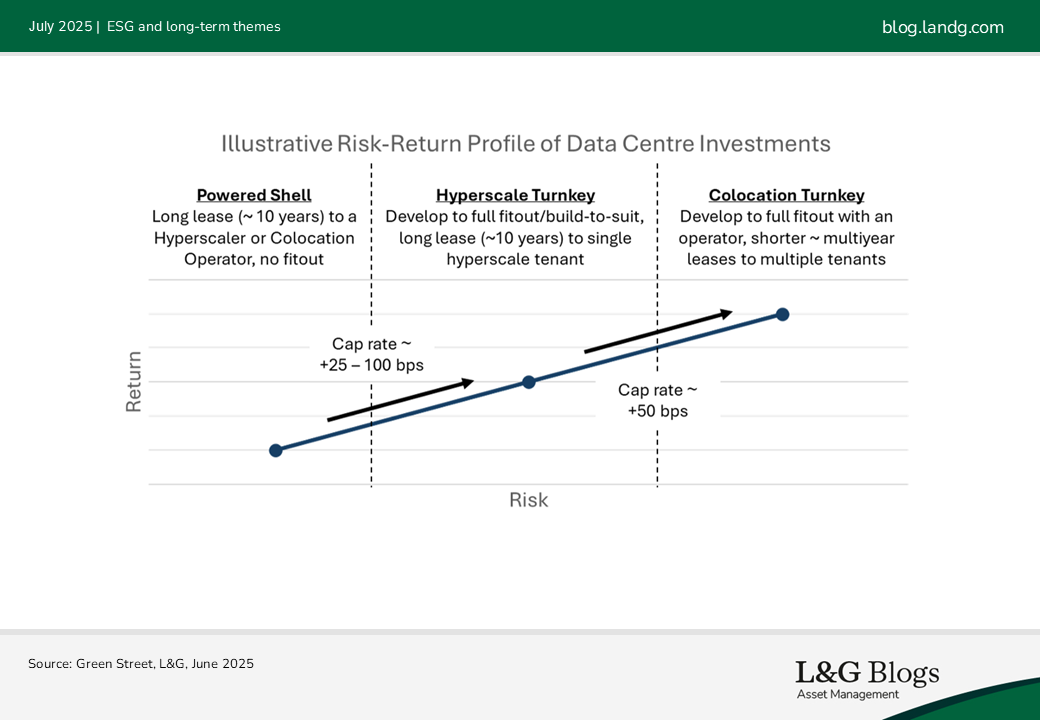

New developments involve securing power and building a powered shell: a power-connected building without technical interior fit-out such as cooling systems. These can be leased to, or co-developed with, co-location operators or major tech firms (often called hyperscalers).

Hyperscaler deals often involve 10- to 15-year build-to-suit leases where the hyperscaler is the only tenant. Turnkey co-location facilities include a full technical buildout, ready for multiple tenants to move in on shorter leases. As operational responsibility grows and lease terms shorten, cap rates typically increase, reflecting the higher risk.

Big Tech companies (i.e., the hyperscalers) operate large-scale AI-capable data centres, which can be self-built or developed with investor funding, and provide today’s leading GenAI models. Co-location data centres lease power to tenants, including hyperscalers, to house their AI chips.

AI-capable data centres require different technical infrastructure in their buildout. As newer AI chips use more power, they generate more heat, increasing cooling requirements and creating retrofitting challenges for older facilities. While older facilities may see less AI-driven demand, they could still serve cloud and enterprise tenants. The latest AI chips require liquid cooling, which adds an additional $1 million per MW to the cost of turnkey developments[4], though these costs are expected to be passed on to tenants, especially in primary markets.
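As a rough illustration of the liquid-cooling premium, the sketch below adds the ~$1 million per MW uplift to the ~$12 million per MW construction baseline cited earlier. Combining the two figures is our assumption: they come from separate sources, and we assume the baseline excludes liquid cooling. The function and constant names are hypothetical.

```python
# Illustrative sketch only. Assumptions (not taken from a single source):
#   - the ~$12m per MW baseline construction cost excludes liquid cooling
#   - liquid cooling adds a flat ~$1m per MW to a turnkey development
BASE_COST_PER_MW_USD = 12_000_000
LIQUID_COOLING_UPLIFT_PER_MW_USD = 1_000_000


def turnkey_cost_usd(capacity_mw: float, liquid_cooled: bool) -> float:
    """Estimated turnkey build cost in USD, optionally including liquid cooling."""
    per_mw = BASE_COST_PER_MW_USD
    if liquid_cooled:
        per_mw += LIQUID_COOLING_UPLIFT_PER_MW_USD
    return capacity_mw * per_mw


def cooling_uplift_pct() -> float:
    """Liquid-cooling premium as a percentage of the assumed baseline cost per MW."""
    return 100 * LIQUID_COOLING_UPLIFT_PER_MW_USD / BASE_COST_PER_MW_USD


if __name__ == "__main__":
    mw = 50  # hypothetical facility size
    air = turnkey_cost_usd(mw, liquid_cooled=False)
    liquid = turnkey_cost_usd(mw, liquid_cooled=True)
    print(f"{mw} MW air-cooled:    ${air / 1e6:,.0f}m")
    print(f"{mw} MW liquid-cooled: ${liquid / 1e6:,.0f}m")
    print(f"Uplift: {cooling_uplift_pct():.1f}% per MW")
```

On these assumptions, a 50 MW liquid-cooled facility costs about $50m more than an air-cooled one, a premium of roughly 8% per MW; as noted above, such costs are expected to be passed on to tenants.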

The rapid pace of AI chip innovation could also challenge older data centres. New AI chips are much more efficient: they can generate far more text using the same amount of power. This means data centres housing these newer chips can deliver much more output for a similar construction cost, which could accelerate the obsolescence of older AI-focussed facilities.[5] Therefore, investors should, in our view, prioritise facilities that are built for the latest AI chips (for example, with liquid cooling) and that can adapt to future chip generations.

Not all stabilised data centres in a platform will necessarily be GenAI-ready, though future developments are, in our view, more likely to be. While data comparing rental growth for AI-enabled and non-AI-enabled facilities is limited, GenAI’s higher forecast growth relative to cloud suggests robust rental prospects.

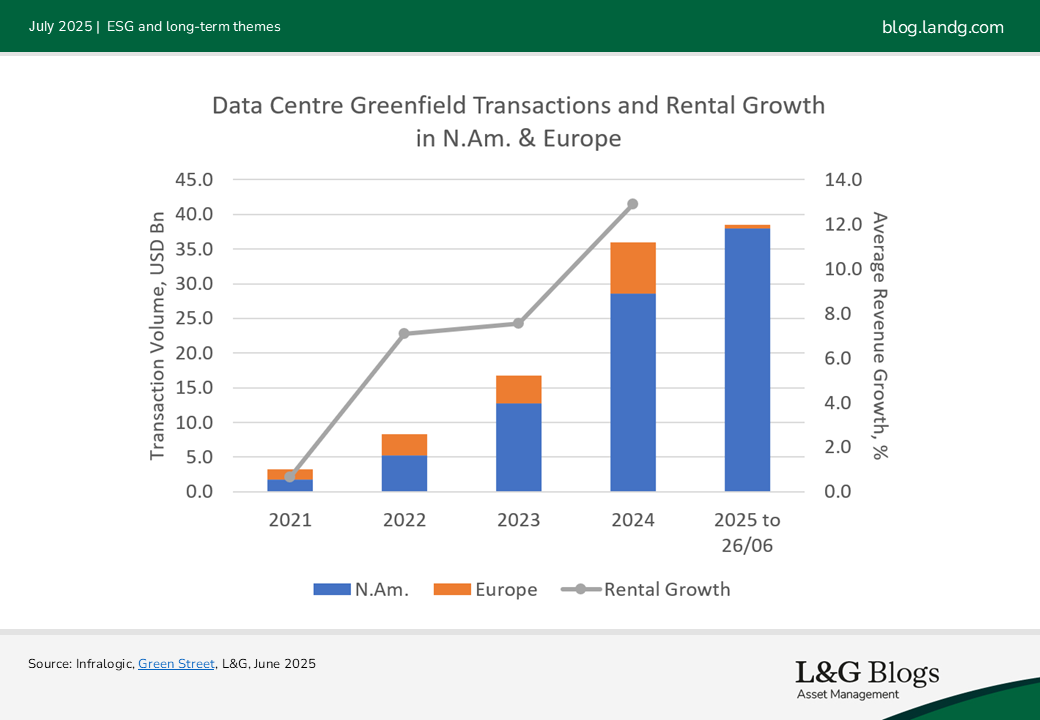

Despite interest rate headwinds, demand for data centre development has increased significantly since 2022, when rental growth inflected upward.[6] As of mid-2025, ongoing challenges in securing timely access to power, exacerbated by grid bottlenecks, mean that demand, intensified by GenAI, continues to outstrip constrained supply. This imbalance could subside towards 2030, as current developments come online.[7]

Different AI processes have different location requirements

Training leading LLMs requires large AI chip clusters housed in data centres with 100 MW to 1 GW of power capacity[8]. Because training isn’t latency-sensitive, it can take place in remote areas with cheaper electricity and more limited fibre access, away from business hubs and primary data centre markets.

In contrast, inferencing is typically done in smaller data centres with less concentrated power needs, but it may require lower latency and, therefore, greater fibre connectivity and proximity to end users, which aligns with primary data centre market locations. Co-location operators with AI-enabled facilities near population centres are well-positioned to benefit from growing inferencing demand, in our view.

In part 2, we’ll explore the investment implications of how training and inferencing could develop.

[1] Green Street, Data Centre Insights, June 2025

[2] Synergy Research Group, August 2024

[3] Goldman Sachs Research as of Q4 2024

[4] Green Street Conference Insights, January 2025

[5] Mary Meeker, Jay Simons, Daegwon Chae, Alexander Krey, Trends – Artificial Intelligence, May 2025

[6] Green Street as of May 2025

[7] Citi Research, Data Center GAINs, April 2025

[8] SemiAnalysis, 100,000 H100 Clusters, June 2024
